Containerization

Definition

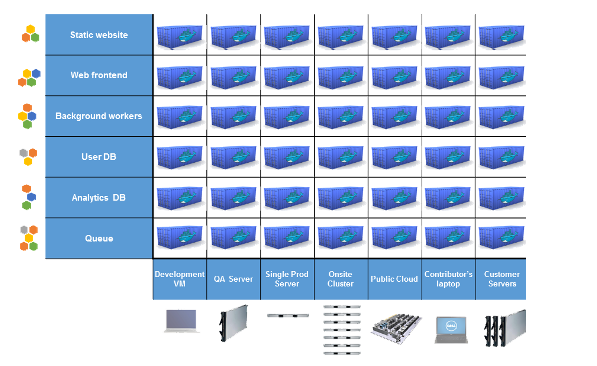

Containerization involves encapsulating applications and their dependencies into containers, allowing for efficient and consistent deployment across different environments. Containers package software in a portable, self-sufficient unit, ensuring isolation and enabling seamless deployment.

Objectives:

- Stop the "it works on my machine" syndrome

- Be the link between dev and ops

A long history (dating back to the 1970s)

What is it?

- Software based on well-known Linux technologies

- Yet another layer between software and hardware

- Created to ease deployment of applications

- Starting point: cargo transport

Containers… for software?

- Just as cargo containers solved the problem of heterogeneous transport modes, can software containers solve the problem of heterogeneous deployment environments?

The market

- LXC (Linux Containers): Offers lightweight, operating-system-level virtualization, enabling multiple isolated Linux systems on a single host.

- rkt (Rocket): Focuses on security and composability, providing a security-focused container runtime as an alternative to Docker.

- Docker: A widely used container platform for building, shipping, and running applications in containers, known for its user-friendly interface and comprehensive tooling.

- Podman: A Docker alternative that manages containers without a daemon and offers a Docker-compatible command-line interface.

- LXD (Linux Containers Next): A container hypervisor with a REST API to manage system containers, aiming for a more user-friendly container management experience.

- Linux-VServer: Provides lightweight virtualization for partitioning a single server into isolated containers for security and resource control.

- VMware vSphere Integrated Containers (VIC): Basic container deployment and management within VMware vSphere environments.

What is a container?

History

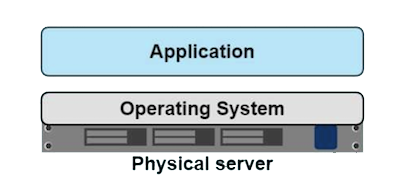

In the dark ages: one application on one physical server

- Slow deployment times

- Huge costs

- Wasted resources

- Difficult to scale

- Difficult to migrate

- Vendor lock-in

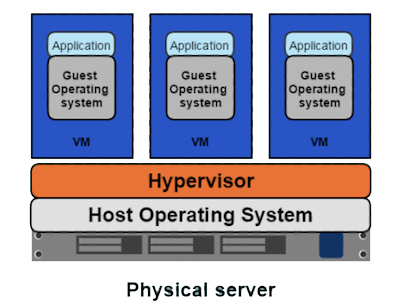

Then, virtualization: one server, multiple apps in VMs

- Better resource pooling: one physical machine divided into multiple virtual machines

- Easier to scale

- VMs in the cloud

- Rapid elasticity

- Pay-as-you-go model

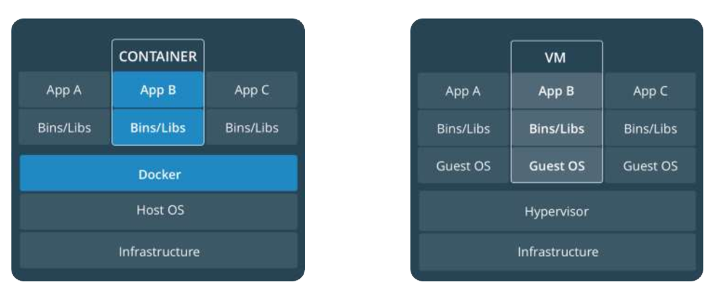

But VMs have limits

Each VM still requires:

- CPU allocation

- Storage

- RAM

- An entire guest operating system

The more VMs you run, the more resources you need

Guest OS means wasted resources

Application portability not guaranteed

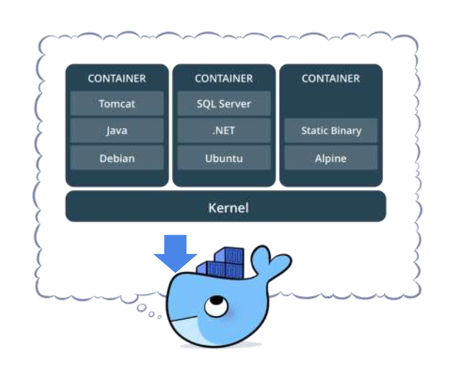

Containers

- Standardized packaging for software and dependencies

- Isolate apps from each other

- Share the same OS kernel

- Work with all major Linux distributions and with Windows
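The shared-kernel point can be checked by hand. The following is a minimal sketch, assuming a Linux host with the docker CLI installed and access to the public alpine image; the function names are illustrative only. It prints the kernel release seen by the host and the one seen from inside a throwaway container. On a Linux host the two values should match; on Docker Desktop for Mac or Windows the container reports the kernel of Docker's Linux VM instead.

```shell
#!/bin/sh
# Kernel release reported by the host.
host_kernel() {
    uname -r
}

# Kernel release reported from inside a throwaway Alpine container.
# Returns 1 without running anything when the docker CLI is missing.
container_kernel() {
    command -v docker >/dev/null 2>&1 || return 1
    docker run --rm alpine uname -r
}

echo "host:      $(host_kernel)"
if ck="$(container_kernel)"; then
    echo "container: $ck"
else
    echo "container: (could not run docker, skipped)"
fi
```

A virtual machine would report the release of its own guest kernel here, which is exactly the overhead containers avoid.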

Benefits

- Speed: no OS to boot, so applications are online in seconds

- Portability: fewer dependencies between process layers, so applications can move between infrastructures

- Efficiency: less OS overhead

- Improved VM density

Virtual machines vs. containers

- Containers are an app-level construct

- VMs are an infrastructure-level construct that turns one machine into many servers

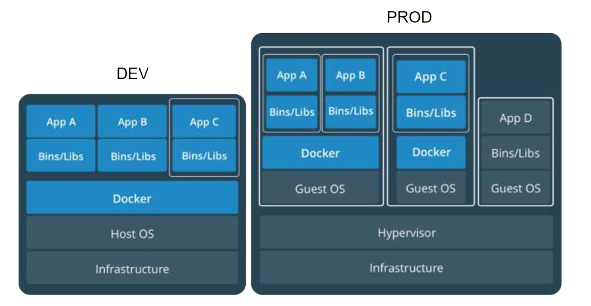

Mixed architecture

- Containers and VMs together give IT a great deal of flexibility to deploy and manage apps optimally

Docker

History

2004: Solaris containers and Zones technology introduced

2008: Linux Containers (LXC) introduced

2013: Initial Release

- Solomon Hykes starts Docker as an internal project at dotCloud

- March 2013: Docker was released as an open-source project by dotCloud, revolutionizing application deployment through containers.

2014: Rapid Growth and Adoption

- June 2014: Docker 1.0 launched, gaining attention for its ease of use and portability.

- June 2014: DockerCon 2014 introduced Docker Hub, a cloud-based repository for sharing container images.

2015: Expansion and Tooling

- April 2015: Docker 1.6 was released alongside updated Compose, Swarm, and Machine tooling.

- June 2015: Docker Engine 1.7 brought orchestration updates and experimental networking.

- November 2015: Docker Engine 1.9 brought production-ready multi-host networking and improved volume management.

2016: Maturation and Enterprise Focus

- February 2016: Docker Engine 1.10 strengthened security with user namespaces and seccomp profiles.

- February 2016: Docker Datacenter launched for enterprise-scale container management.

2017: Further Enterprise Expansion

- January 2017: Docker 1.13 introduced secrets management for Swarm services.

- March 2017: Docker Enterprise Edition (EE) consolidated Docker's commercial offering.

- October 2017: Docker announced Kubernetes support for Docker EE.

2018-2019: Continued Enhancements

- 2018: Docker Desktop for Mac and Windows simplified local development and gained built-in Kubernetes support.

- July 2019: Docker Engine 19.03 was released, with an emphasis on improved developer experience.

2020-Present: Focus on Developer Experience and Security

- 2021-2022: Docker continued refining security, enhancing developer tools, and optimizing performance.

Statistics

- Docker Hub hosts millions of container images, supported by a vast community.

- Billions of downloads and adoption by millions of developers and enterprises globally.

- Remains one of the most popular containerization platforms, pivotal in container technology adoption.

Throughout its evolution, Docker transformed from a niche tool to a fundamental technology, driving container adoption due to its user-friendliness, portability, and ecosystem growth.

Inside docker

Written in Go, Docker is a single tool built on top of:

- libcontainer (LXC was the original execution driver; both rely on Linux cgroups and namespaces), providing:

  - Filesystem isolation: each process container runs in a completely separate root filesystem.

  - Resource isolation: system resources such as CPU and memory can be allocated differently to each process container, using cgroups.

  - Network isolation: each process container runs in its own network namespace, with a virtual interface and IP address of its own.

- Storage backends (devicemapper, AUFS, Btrfs, and overlay2 on current releases), providing:

  - Layered file system: root filesystems are created using copy-on-write, which makes deployment extremely fast and cheap in both memory and disk space.
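Namespaces and cgroups are ordinary Linux kernel features, so they can be inspected without Docker at all. The sketch below assumes a Linux system with /proc mounted; the function names are illustrative. It lists the namespace types and the cgroup membership of the current shell process.

```shell
#!/bin/sh
# List the namespace types the calling process belongs to (Linux only).
list_namespaces() {
    ls /proc/self/ns
}

# Print the cgroup membership of the calling process.
show_cgroups() {
    cat /proc/self/cgroup
}

echo "Namespaces of this process:"
list_namespaces
echo "Cgroups of this process:"
show_cgroups
```

Running `ls -l /proc/self/ns` on the host and again inside a container (for example with `docker run --rm alpine ls -l /proc/self/ns`) should show different namespace identifiers, which is the isolation described above. Resource limits are set per container with flags such as `docker run --memory 256m --cpus 0.5`.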

Basic usage

Containerization platforms like Docker provide tools to create, deploy, and manage containers. Users can build containers from images, run them as instances, manage their lifecycle, and interact with them using container-specific commands.

List active containers:

    docker ps

Output:

    CONTAINER ID   IMAGE          COMMAND       CREATED      STATUS      PORTS    NAMES
    abcdef123456   my_container   "/start.sh"   1 hour ago   Up 1 hour   80/tcp   web_server

List all containers (active and inactive):

    docker ps -a

Output:

    CONTAINER ID   IMAGE          COMMAND       CREATED      STATUS       PORTS    NAMES
    abcdef123456   my_container   "/start.sh"   1 hour ago   Up 1 hour    80/tcp   web_server
    12345abcde     my_image       "/bin/bash"   3 days ago   Exited (0)            my_container

List images:

    docker images

Pull an image from a registry:

    docker pull [OPTIONS] NAME[:TAG|@DIGEST]

Remove an image:

    docker rmi [OPTIONS] IMAGE [IMAGE...]

Create a container from an image:

    docker create [OPTIONS] IMAGE [COMMAND] [ARG...]

Start a created or stopped container:

    docker start [OPTIONS] CONTAINER [CONTAINER...]

Run a container (create + start):

    docker run [OPTIONS] IMAGE[:TAG|@DIGEST] [COMMAND] [ARG...]

Stop a running container:

    docker stop [OPTIONS] CONTAINER [CONTAINER...]

Remove a container:

    docker rm [OPTIONS] CONTAINER [CONTAINER...]

Port forwarding (map a host port to a container port):

    docker run -p HOST_PORT:CONTAINER_PORT IMAGE_NAME

Execute a command in a running container:

    docker exec [OPTIONS] CONTAINER COMMAND [ARG...]

View container logs:

    docker logs [OPTIONS] CONTAINER
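The commands above can be chained into one container lifecycle. This is a sketch under a few assumptions: the httpd:2.4 image, the container name web_server, and the host port 8080 are example choices, and the `run` helper is a hypothetical wrapper, not part of Docker. By default the script only prints each command; set DRY_RUN=0 to execute them against a running Docker daemon.

```shell
#!/bin/sh
# Print each command; execute it only when DRY_RUN=0.
DRY_RUN="${DRY_RUN:-1}"
run() {
    echo "+ $*"
    if [ "$DRY_RUN" = "0" ]; then
        "$@"
    fi
}

# One full lifecycle: pull, run, inspect, stop, remove.
lifecycle() {
    run docker pull httpd:2.4
    run docker run -d --name web_server -p 8080:80 httpd:2.4
    run docker ps
    run docker logs web_server
    run docker exec web_server ls /usr/local/apache2/htdocs
    run docker stop web_server
    run docker rm web_server
}

lifecycle
```

Note that `docker rm` only works once the container is stopped, which is why `docker stop` comes first. The sketch does not stop on errors, so in real mode a failed pull is followed by the remaining commands anyway.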

Custom image and Dockerfile

Definition

A Docker image is a self-contained, immutable snapshot or template that includes an application's code, dependencies, and configuration, serving as the foundation to create and run containers.

Create a Dockerfile

    # Base Image
    FROM ubuntu:latest

    # Maintainer Information
    LABEL maintainer="Your Name <your@email.com>"

    # Install Necessary Packages
    RUN apt-get update && \
        apt-get install -y \
        package1 \
        package2

    # Set Working Directory
    WORKDIR /app

    # Copy Files/Directory to Container
    COPY . /app

    # Expose Ports
    EXPOSE 80

    # Define Environment Variables
    ENV ENV_VAR_NAME=value

    # Run Application
    CMD [ "executable" ]

    FROM ubuntu:latest

- FROM: Specifies the base image for the new image being built.

- ubuntu:latest: The base image, here Ubuntu with the latest tag. latest is the default tag and usually points to the most recent release; pinning an explicit version gives more reproducible builds.

    LABEL maintainer="Your Name <your@email.com>"

- LABEL: Adds metadata to the image.

- maintainer: Custom label key identifying the maintainer's information.

    RUN apt-get update && apt-get install -y package1 package2

- RUN: Executes commands during the build and stores the result in a new image layer.

- apt-get update && apt-get install -y package1 package2: Updates package lists and installs the specified packages.

    WORKDIR /app

- WORKDIR: Sets the working directory for subsequent instructions in the Dockerfile.

- /app: Directory path within the container.

    COPY . /app

- COPY: Copies files or directories from the build context on the host into the image.

- .: Represents the current directory of the build context.

- /app: Destination directory in the image.

    EXPOSE 80

- EXPOSE: Documents that the container listens on specific network ports at runtime. It does not publish the port; that is done with docker run -p.

- 80: Port number the container listens on.

    ENV ENV_VAR_NAME=value

- ENV: Sets environment variables inside the container.

- ENV_VAR_NAME=value: Name-value pair for an environment variable.

    CMD [ "executable" ]

- CMD: Specifies the default command to be executed when a container starts.

- [ "executable" ]: Command and its arguments to run when the container starts.

Building and Pushing an Image to Docker Hub

    # Build Docker Image
    docker build -t yourusername/repositoryname:tag .

    # Log in to Docker Hub (Enter your Docker Hub credentials)
    docker login

    # Push Image to Docker Hub
    docker push yourusername/repositoryname:tag

Replace placeholders:

- yourusername: Your Docker Hub username.

- repositoryname: Name for your repository on Docker Hub.

- tag: Tag/version for your image (e.g., latest).
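To make the structure of an image reference concrete, here is a small sketch that splits a reference into its name and its tag. The helper names `image_tag` and `image_name` are hypothetical, and digest references (name@sha256:...) are deliberately not handled. It relies on one documented Docker behavior: when no tag is given, latest is used.

```shell
#!/bin/sh
# Print the tag of an image reference, or "latest" when none is given.
# Digest references (name@sha256:...) are not handled in this sketch.
image_tag() {
    last="${1##*/}"            # drop registry host and namespace
    case "$last" in
        *:*) echo "${last##*:}" ;;
        *)   echo "latest" ;;
    esac
}

# Print the image reference without its tag.
image_name() {
    last="${1##*/}"
    prefix="${1%"$last"}"      # everything up to and including the last "/"
    echo "${prefix}${last%%:*}"
}

for ref in yourusername/repositoryname:tag httpd localhost:5000/myhttpd:2.4; do
    echo "$ref -> name=$(image_name "$ref") tag=$(image_tag "$ref")"
done
```

Only the last path component is searched for a colon, so a registry address with a port (localhost:5000 in the example) is not mistaken for a tag.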

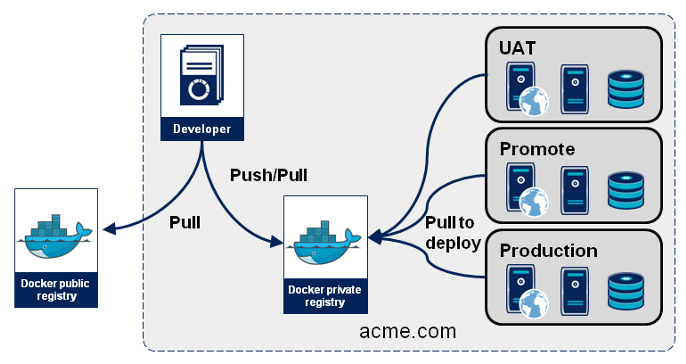

Registry

Registries store Docker images, acting as repositories where users can push, pull, and manage images. Docker Hub is a popular public registry, while private registries offer secure storage for proprietary or sensitive images within organizations.

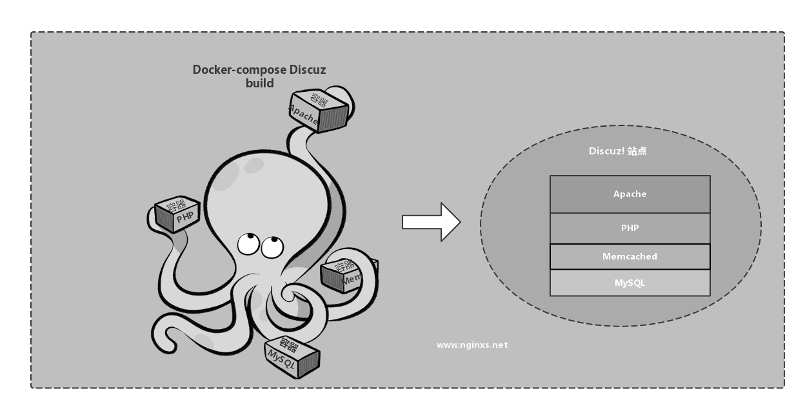

Multi-container

Docker Compose is a tool for defining and running multi-container Docker applications. It uses a YAML file (docker-compose.yml) to configure services, allowing users to define multiple containers, their configurations, networks, and volumes in a single file.

docker-compose.yml file

The file is written in YAML: each service is declared under the services key and described with key-value pairs, as in the template below.

    version: '3.8'
    services:
      service1:
        image: imagename:tag
        ports:
          - "host_port:container_port"
        volumes:
          - "host_path:container_path"
        environment:
          - KEY=VALUE
        command: command_to_run

- version: Specifies the Docker Compose file version. Recent Compose releases treat this key as obsolete and ignore it.

- services: Defines the services or containers to be created and run.

- image: Specifies the image to use for the service.

- ports: Maps ports from the host to the container.

- volumes: Mounts volumes from the host to the container.

- environment: Sets environment variables for the service.

- command: Overrides the default command for the service.
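As a concrete instance of these keys, the sketch below writes a minimal Compose file for a single Apache service into a temporary directory and, if Compose is installed, asks it to validate the file. The service name web, the httpd:2.4 image, port 8080, and the TZ variable are example values, and `write_compose` is a hypothetical helper. The version key is left out since recent Compose releases ignore it.

```shell
#!/bin/sh
# Write a minimal Compose file for one Apache service into the given directory.
write_compose() {
    cat > "$1/docker-compose.yml" <<'EOF'
services:
  web:
    image: httpd:2.4
    ports:
      - "8080:80"
    environment:
      - TZ=UTC
EOF
}

workdir="$(mktemp -d)"
write_compose "$workdir"
cat "$workdir/docker-compose.yml"

# Validate the file when the Compose plugin is installed; skipped otherwise.
if docker compose version >/dev/null 2>&1; then
    docker compose -f "$workdir/docker-compose.yml" config
fi
```

`docker compose config` parses the file and prints the resolved configuration, which is a quick way to catch indentation mistakes before starting anything.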

Basic commands

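Start the services defined in docker-compose.yml:

    docker-compose up

Start the services in detached mode:

    docker-compose up -d

Stop and remove the services:

    docker-compose down

Recent Docker releases ship Compose as a CLI plugin, where the same commands are written docker compose up and docker compose down.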

Exercises

🧪 Exercise 1 - Basic commands

- Install Docker Desktop on your computer

- Have a look at Docker Hub and find an image of an HTTP server (httpd). You can find documentation on the image's Docker Hub page

- Find the commands in the course to:

  - Pull the Apache HTTP server image from Docker Hub

  - Start your first containerized web server, reachable at http://localhost:8080

  - Test it in your browser

solution

    # Pull the Apache HTTP Server image from Docker Hub
    docker pull httpd

    # Run the Apache HTTP Server container
    docker run -d -p 8080:80 httpd

🧪 Exercise 2 : Image creation

Write a Dockerfile for your own custom Apache HTTP server image, check that it works locally, then push it to Docker Hub. You can choose your distribution and adapt the RUN command accordingly (the solution uses Red Hat).

solution

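    FROM redhat/ubi8

    # Update the system and install httpd in a single layer
    RUN dnf -y update && \
        dnf -y install httpd && \
        dnf clean all

    # Document the port Apache listens on
    EXPOSE 80

    # Run Apache in the foreground so the container keeps running
    ENTRYPOINT ["/usr/sbin/httpd", "-D", "FOREGROUND"]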

🧪 Exercise 3 - Registry management

Create your own repository on Docker Hub and push your HTTPD image to it.

solution

    docker tag <IMAGE_LOCAL_ID_OR_NAME> <DOCKER_HUB_USERNAME>/<REPOSITORY_NAME>:<TAG>
    docker push <DOCKER_HUB_USERNAME>/<REPOSITORY_NAME>:<TAG>

Tips

You can use other registry services, such as Harbor.

🧪 Exercise 4 - Docker compose migration

Convert your previous HTTPD container setup into a docker-compose.yml configuration.

solution

docker-compose.yml

    version: '3'
    services:
      web:
        # Same image and port mapping as the docker run command from Exercise 1
        image: 'httpd:latest'
        ports:
          - '8080:80'

🧪 Exercise 5 - Volume management

Map a local directory into your Apache container so that Apache serves your own index.html from /usr/local/apache2/htdocs.

solution

docker-compose.yml

    services:
      webservice:
        image: myhttpd
        build:
          context: .
          dockerfile: Dockerfile
        ports:
          - "8080:80"
        volumes:
          - ./site-data:/usr/local/apache2/htdocs

Create the Apache data folder (site-data) and an index.html file inside it:

    $ ls ./site-data
    index.html
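One possible way to create that folder and file from the command line is sketched below. The page content and the helper name `make_site_data` are placeholders; any valid index.html will do.

```shell
#!/bin/sh
# Create <dir>/site-data/index.html with a small placeholder page.
make_site_data() {
    mkdir -p "$1/site-data"
    cat > "$1/site-data/index.html" <<'EOF'
<!DOCTYPE html>
<html>
<body>
<h1>Hello from my Apache container</h1>
</body>
</html>
EOF
}

# Create the folder next to docker-compose.yml, then list its content.
make_site_data .
ls ./site-data
```

Because the directory is bind-mounted, later edits to site-data/index.html on the host are served by the running container without rebuilding the image.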

Create a Dockerfile to build your image:

Dockerfile

    FROM httpd:2.4
    # index.html lives in site-data/ inside the build context
    COPY site-data/index.html /usr/local/apache2/htdocs/

Start your containers with docker-compose:

    $ docker-compose up
    Creating network "dockercompose_default" with the default driver
    Creating webservice ...

🧪 Exercise 6 - Practical work

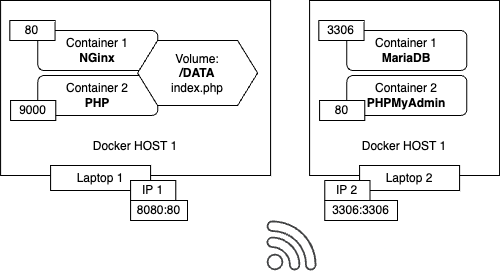

Create a LAMP architecture with 2 computers over a WiFi network as follows, with:

- an Apache / Nginx service

- a PHP service

- a MariaDB or MongoDB service

- a phpMyAdmin or mongo-express service

solution

docker-compose.yml

    services:
      web:
        image: 'nginx:latest'
        ports:
          - '127.0.0.1:8090:80'
        volumes:
          - ./site:/site
          - ./site.conf:/etc/nginx/conf.d/default.conf
        links:
          - php
      php:
        build:
          context: .
          dockerfile: php.Dockerfile
        volumes:
          - ./site:/site
          - ./php.ini:/usr/local/etc/php/conf.d/php.ini
      db:
        image: mysql:9.1.0
        environment:
          MYSQL_ROOT_PASSWORD: my-secret-pw # Set your root password
          MYSQL_DATABASE: my_database # Optional: Create a database on startup
          MYSQL_USER: my_user # Optional: Create a user
          MYSQL_PASSWORD: user_password # Optional: User password
        volumes:
          - mysql_data:/var/lib/mysql # Persist data
          - ./create_db.sql:/docker-entrypoint-initdb.d/create_db.sql:ro
        ports:
          - "3306:3306" # Expose MySQL port
        restart: always # Restart policy
      phpmyadmin:
        image: phpmyadmin
        restart: always
        ports:
          - 9092:80
        environment:
          - PMA_ARBITRARY=1
          - PMA_HOST=db
          - PMA_USER=my_user
          - PMA_PASSWORD=user_password
          - PMA_PORT=3306

    volumes:
      mysql_data:

php.ini

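    ; PDO and pdo_mysql are installed and enabled by docker-php-ext-install in php.Dockerfile.
    ; Uncomment the lines below only if your PHP image does not enable them already.
    ;extension=pdo.so
    ;extension=pdo_mysql.so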

create_db.sql

    -- MYSQL_DATABASE already creates the database, so only create it if it is missing
    CREATE DATABASE IF NOT EXISTS my_database;
    USE my_database;

    CREATE TABLE my_table (
        id INT UNSIGNED AUTO_INCREMENT PRIMARY KEY,
        name VARCHAR(30) NOT NULL,
        email VARCHAR(50) NOT NULL,
        phone VARCHAR(20) NOT NULL
    );

    INSERT INTO my_table (name, email, phone) VALUES ('John', 'john@example.com', '555-555-5555');
    INSERT INTO my_table (name, email, phone) VALUES ('Jane', 'jane@example.com', '555-555-5555');
    INSERT INTO my_table (name, email, phone) VALUES ('Bob', 'bob@example.com', '555-555-5555');

php.Dockerfile

    FROM php:fpm
    RUN docker-php-ext-install pdo pdo_mysql

site.conf

    server {
        listen 80;
        index index.php index.html;
        server_name 127.0.0.1;
        error_log /var/log/nginx/error.log;
        access_log /var/log/nginx/access.log;
        root /site;

        location ~ \.php$ {
            try_files $uri =404;
            fastcgi_split_path_info ^(.+\.php)(/.+)$;
            fastcgi_pass php:9000;
            fastcgi_index index.php;
            include fastcgi_params;
            fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
            fastcgi_param PATH_INFO $fastcgi_path_info;
        }
    }

site/index.php

    <!DOCTYPE html>
    <html>
    <body>
    <h1>My First Heading</h1>
    <p>My first paragraph.</p>
    <?php
    // Show all errors while developing; disable this in production.
    ini_set('display_errors', 1);
    ini_set('display_startup_errors', 1);
    error_reporting(E_ALL);

    // Dump the PHP configuration (useful to confirm that pdo_mysql is loaded).
    phpinfo();

    try {
        // "db" is the database service name from docker-compose.yml.
        $mysqlClient = new PDO("mysql:host=db;dbname=my_database;charset=utf8mb4", "my_user", "user_password");
        $mysqlClient->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);
        echo "Connected successfully";

        // Query inside the try block so a failed connection never reaches it.
        $rows = $mysqlClient->query("SELECT * FROM my_table")->fetchAll(PDO::FETCH_ASSOC);
        if (count($rows) > 0) {
            foreach ($rows as $row) {
                // Escape values before printing them as HTML.
                echo "<p>Name: " . htmlspecialchars($row['name']) . "<br>";
                echo "Email: " . htmlspecialchars($row['email']) . "<br>";
                echo "Phone: " . htmlspecialchars($row['phone']) . "</p>";
            }
        } else {
            echo "0 results";
        }
    } catch (PDOException $e) {
        echo "Database error: " . $e->getMessage();
    }
    ?>
    </body>
    </html>

PDO on php:fpm

Remember to install and enable the PDO MySQL extension in your PHP container; php.Dockerfile does this with docker-php-ext-install.

📖 Further reading

- De chroot à Docker, Podman, et maintenant les modules Wasm, 40 ans d'évolution de la conteneurisation by Thomas SCHWENDER